Fast DeepSeek API for Reasoning and Code

NetMind's DeepSeek API delivers fast, competitively priced inference for large language models, making it a good fit for tasks like logical reasoning, code generation, and function calling. It handles inputs of up to 128,000 tokens and returns reliable, high-speed results through a straightforward API. Bring DeepSeek-R1-0528 into your application now for instant, scalable LLM inference!

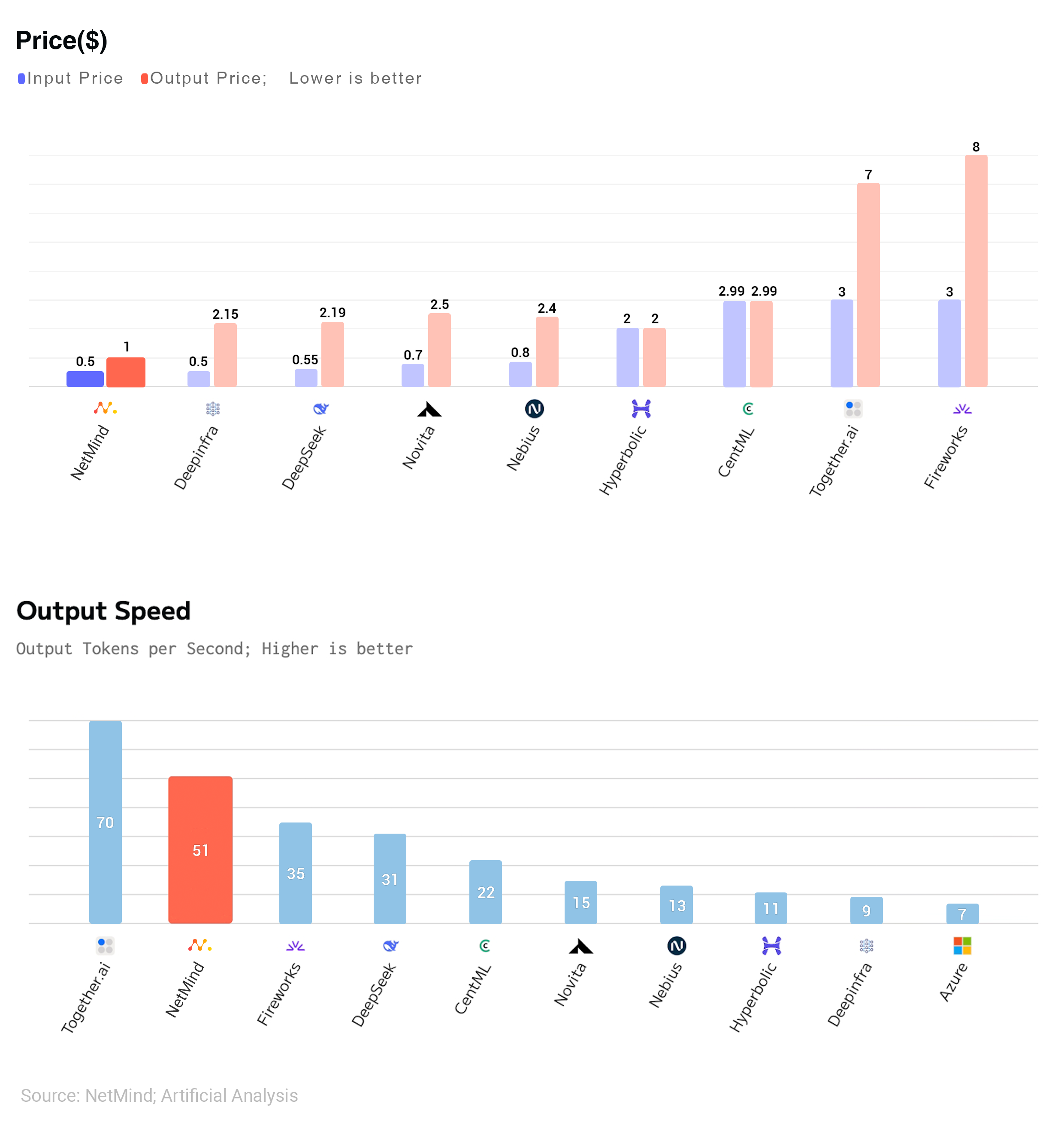

Want a deeper look at how different DeepSeek API providers compare?

To use the API for inference, please register an account first. You can view and manage your API token in the API Token dashboard.

All requests to the inference API require authentication via an API token. The token uniquely identifies your account and authorizes each request you send.

When calling the API, set the Authorization header to your API token, configure the request parameters as shown below, and send the request.
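As a rough illustration of the authenticated call described above, here is a minimal Python sketch using only the standard library. Several details are assumptions rather than facts from this page: the endpoint URL is a placeholder, the `Bearer` scheme in the Authorization header is a common convention that may not match this API, the model identifier is a guess, and the chat-style request body is only one plausible shape. The helper names `build_request` and `send` are hypothetical. Check the API documentation and your API Token dashboard for the real values.

```python
import json
import urllib.request

# Assumed values, not confirmed by this page: replace with those from the API docs.
API_URL = "https://api.example.com/v1/chat/completions"  # placeholder endpoint
MODEL = "deepseek-ai/DeepSeek-R1-0528"  # assumed model identifier


def build_request(api_token: str, prompt: str, max_tokens: int = 512) -> urllib.request.Request:
    """Build (but do not send) an authenticated inference request."""
    # Assumed chat-style payload; the real parameter names may differ.
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # The API token goes in the Authorization header.
            # The "Bearer " prefix is an assumption.
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

To try it, read the token from an environment variable rather than hard-coding it, for example `send(build_request(os.environ["NETMIND_API_TOKEN"], "Reverse a string in Python."))`, where the variable name is also just an illustration. Keeping request construction separate from sending makes it easy to inspect the headers and body before any network traffic happens.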

We offer one of the fastest and most cost-effective DeepSeek APIs available. With top-tier performance, developer-friendly features, and full support for function calling, our self-hosted platform delivers scalable, reliable inference without hidden costs. Start using our DeepSeek API instantly.

Want to learn more about how DeepSeek APIs can power your AI? Check out our test of DeepSeek here!